Welcome to our channel, where we delve into the fascinating world of language models! In this video, we explore Guanaco 65b, the largest model in the Guanaco family, which was finetuned with QLoRA (Quantized Low-Rank Adapters), a technique for efficiently finetuning quantized Large Language Models (LLMs). If you're interested in cutting-edge AI techniques and improving model performance, you're in the right place!

[Links Used]:

☕ Buy Me Coffee or Donate to Support the Channel: – It would mean a lot if you did! Thank you so much, guys! Love y'all

Follow me on Twitter:

Finetune on Google Colab:

Research Paper:

Repo:

Guanaco Demo:

GPT vs Guanaco Google Colab Demo:

QLoRA Finetune Tweet:

🔎 Key Takeaways from the Video:

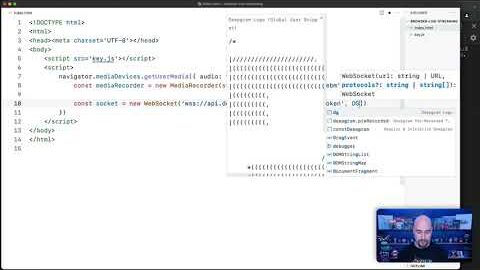

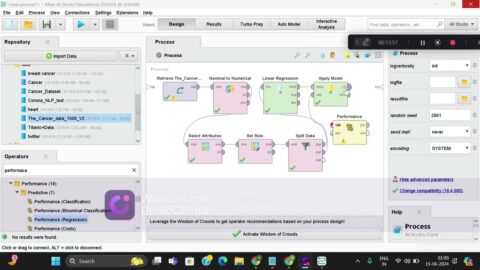

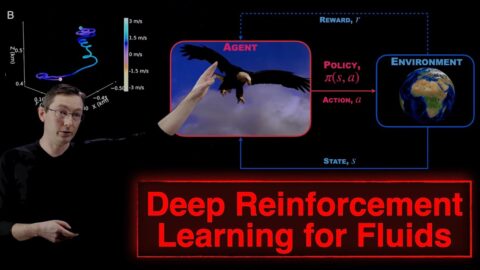

Guanaco 65b uses QLoRA to cut memory usage during finetuning while maintaining high performance. With QLoRA, a 65-billion-parameter model can be finetuned on a single 48GB GPU while preserving full 16-bit finetuning task performance. The key idea is to backpropagate gradients through a frozen, 4-bit quantized pretrained language model into Low-Rank Adapters (LoRA): the base weights are never updated, and only the small adapter layers are trained. Because each adapter represents the weight update as the product of two low-rank matrices, the number of trainable parameters is a tiny fraction of the full model, which keeps both computation and memory in check.
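The memory claim and the adapter idea can be sketched in a few lines of plain Python. This is a hypothetical, simplified illustration, not the QLoRA code: it counts weight memory only (ignoring quantization constants, adapter weights, optimizer states, and activations), uses a crude per-row absmax integer quantizer in place of the paper's 4-bit NormalFloat format, and shows only the forward pass. All function names, the layer size (8192 × 8192), and the rank (r = 64) are illustrative assumptions.

```python
# Minimal, hypothetical sketch of the QLoRA idea in plain Python.
# NOT the QLoRA/bitsandbytes implementation: it uses simple absmax integer
# quantization instead of 4-bit NormalFloat (NF4) and shows only the forward pass.
import random


def weight_memory_gb(n_params: float, bits: int) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits / 8 / 1e9


def lora_param_count(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable params for a d x k weight: full update vs. rank-r factors B (d x r) and A (r x k)."""
    return d * k, r * (d + k)


def quantize_absmax_4bit(row: list[float]) -> tuple[list[int], float]:
    """Map one row to signed integers in [-7, 7], scaled by its largest absolute value."""
    scale = max(abs(v) for v in row) / 7 or 1.0  # fall back to 1.0 for an all-zero row
    return [round(v / scale) for v in row], scale


def quantize_matrix(W: list[list[float]]) -> tuple[list[list[int]], list[float]]:
    """Quantize each row separately (one scale per row, a crude block-wise scheme)."""
    pairs = [quantize_absmax_4bit(row) for row in W]
    return [q for q, _ in pairs], [s for _, s in pairs]


def dequantize_matrix(qW: list[list[int]], scales: list[float]) -> list[list[float]]:
    """Recover approximate weights; the stored integers themselves are never updated."""
    return [[q * s for q in row] for row, s in zip(qW, scales)]


def matvec(M: list[list[float]], x: list[float]) -> list[float]:
    """Plain matrix-vector product."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def lora_forward(qW, scales, A, B, x, alpha: float, r: int) -> list[float]:
    """y = dequant(W) x + (alpha / r) * B (A x); only A and B would be trained."""
    base = matvec(dequantize_matrix(qW, scales), x)  # frozen, quantized path
    update = matvec(B, matvec(A, x))                  # low-rank adapter path
    return [b + (alpha / r) * u for b, u in zip(base, update)]


if __name__ == "__main__":
    # Weights-only memory for a 65-billion-parameter model.
    print(weight_memory_gb(65e9, 16))  # 130.0 (GB at 16-bit)
    print(weight_memory_gb(65e9, 4))   # 32.5 (GB at 4-bit)

    # Illustrative sizes (assumed): an 8192 x 8192 projection with LoRA rank 64.
    print(lora_param_count(8192, 8192, 64))  # (67108864, 1048576)

    # Tiny demo: B starts at zero, so the adapter initially leaves the output unchanged.
    random.seed(0)
    d, k, r = 4, 3, 2
    W = [[random.uniform(-1, 1) for _ in range(k)] for _ in range(d)]
    qW, scales = quantize_matrix(W)
    A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]
    B = [[0.0] * r for _ in range(d)]
    x = [1.0, 2.0, 3.0]
    print(lora_forward(qW, scales, A, B, x, alpha=16, r=r))
```

At 16-bit, the weights alone (130 GB) far exceed a 48GB card, while at 4-bit (32.5 GB) they fit with headroom for adapters and activations. For the assumed layer, a rank-64 adapter trains about 1.6% as many parameters as a full update. Initializing B to zero, as LoRA does, means finetuning starts from the unchanged pretrained model.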

– The Guanaco model family is the best-performing family produced with the QLoRA approach, outperforming all previous openly released models on the Vicuna benchmark.

– On the Vicuna benchmark, Guanaco 65b reaches an impressive 99.3% of ChatGPT's performance level.

– Notably, finetuning Guanaco takes only 24 hours on a single GPU, which is remarkably efficient given the model's scale.

💡 Dive deeper into the world of Guanaco 65b and QLoRA as we explore the incredible advancements in language model finetuning. Gain insights into the revolutionary techniques employed by researchers to optimize memory usage and achieve outstanding performance results.

🔎 Additional Tags and Keywords:

Guanaco 65b, QLoRA approach, efficient finetuning, quantized Language Models, LLMs, memory usage, high performance, 65 billion parameter model, 48GB GPU, 16-bit finetuning, backpropagate gradients, frozen 4-bit quantized pretrained language model, Low Rank Adapters, LoRA, efficient computation, memory utilization, low-rank factorization techniques, parameter count reduction, best-performing models, Vicuna benchmark, ChatGPT, language model, finetuning process, efficient approach, AI techniques, model performance

🔖 Hashtags:

#Guanaco65b #QLoRA #LanguageModels #Finetuning #Efficiency #AI #CuttingEdge #MemoryUsage #HighPerformance #Modeling #Technology