The field of artificial intelligence (AI) has grown remarkably in recent years, with significant advances in machine learning, computer vision, and natural language processing. These advances raise ethical concerns about how AI systems are developed and deployed. The biggest problem in ethical AI is the potential for AI systems to perpetuate existing biases and inequalities.

AI systems are built by training machine learning models on large datasets that are typically collected from human activity. These datasets can contain biases and discriminatory patterns that reflect the society from which they were collected. For example, a dataset used to train a facial recognition system may contain more images of people from some ethnic groups than from others, so the system recognizes faces from the well-represented groups more accurately than faces from the rest.
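The imbalance described above can be measured directly. The following is a minimal sketch, assuming each record carries a group label and that true and predicted labels are available; the function names, the group labels "A" and "B", and the toy data are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def group_shares(groups):
    """Return each group's share of the dataset as a fraction of all records."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}


def accuracy_by_group(groups, y_true, y_pred):
    """Return the fraction of correct predictions within each group."""
    correct = Counter()
    seen = Counter()
    for g, t, p in zip(groups, y_true, y_pred):
        seen[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / seen[g] for g in seen}


# Hypothetical toy data: group "A" is over-represented relative to group "B".
groups = ["A", "A", "A", "A", "A", "A", "B", "B"]
y_true = [1, 0, 1, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0]

shares = group_shares(groups)
accuracy = accuracy_by_group(groups, y_true, y_pred)
print(shares)
print(accuracy)
```

Reporting accuracy per group rather than as a single overall number is the design choice that matters here: an aggregate score can look acceptable while hiding a much lower score for an under-represented group.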

If AI systems are not designed and deployed with ethical considerations in mind, they can reinforce and even amplify existing biases and inequalities. The result can be systems that discriminate against certain groups of people, entrenching existing power structures and social injustices.

To address this problem, several approaches can be taken:

Diversify the dataset: One way to address biases in AI systems is to ensure that the datasets used to train them are diverse and representative of the population they are intended to serve. This means collecting data from a wide range of sources and taking steps to ensure that the data is balanced and reflective of the diversity of the population.

Be transparent about the data and algorithms: Another approach is to be transparent about the data and algorithms used to train AI systems. This means documenting the data sources and the methods used to preprocess and clean the data. It also means being transparent about the algorithms used and how they make decisions.

Establish ethical guidelines: Organizations that develop and deploy AI systems should establish ethical guidelines that outline the principles that guide their development and use. These guidelines should be informed by principles such as fairness, accountability, and transparency, and should be regularly reviewed and updated.

Monitor and audit AI systems: Organizations should also implement monitoring and auditing systems to ensure that AI systems are operating in a manner consistent with ethical guidelines. This can involve monitoring the inputs and outputs of the system to ensure that it is not discriminating against certain groups of people.

Engage with stakeholders: Finally, organizations should engage with stakeholders, including affected communities, to ensure that AI systems are developed and deployed in a manner that is consistent with ethical principles. This can involve soliciting feedback from stakeholders and incorporating it into the development and deployment process.
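The monitoring and auditing step described above can be sketched as a simple check over logged decisions. This is an illustrative sketch only, assuming the system's outputs are logged as (group, decision) pairs with a decision of 1 for a favorable outcome; the function names, the log data, and the 0.8 threshold are assumptions made for the example, not a prescribed standard for any particular system.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions per group.

    records: iterable of (group, decision) pairs, where decision is 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def rate_ratio(rates):
    """Return the lowest selection rate divided by the highest (1.0 is parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest


def audit(records, threshold=0.8):
    """Flag the system when the rate ratio falls below the assumed threshold."""
    rates = selection_rates(records)
    ratio = rate_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


# Hypothetical decision log for two placeholder groups.
log = [("A", 1), ("A", 1), ("A", 1), ("A", 0),
       ("B", 1), ("B", 0), ("B", 0), ("B", 0)]

report = audit(log)
print(report)
```

A flag from a check like this is a prompt for human review, not proof of discrimination; differing selection rates can have several causes, and the appropriate metric and threshold depend on the application.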

In conclusion, the biggest problem in ethical AI is the potential for AI systems to perpetuate existing biases and inequalities. Addressing this problem requires a multifaceted approach: diversifying datasets, being transparent about data and algorithms, establishing ethical guidelines, monitoring and auditing AI systems, and engaging with stakeholders. By taking these steps, organizations can reduce the risk that the AI systems they develop and deploy perpetuate existing biases and inequalities.


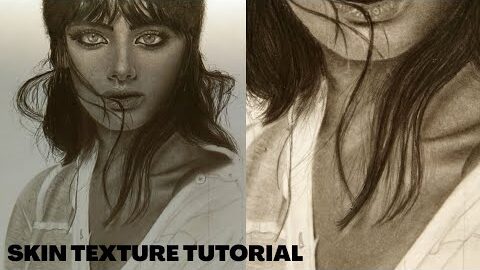
