Named Entity Recognition (NER) is a natural language processing (NLP) task that identifies named entities (such as people, organizations, locations, dates, and numerical values) in unstructured text and assigns each one a category. The extracted entities can then feed a variety of downstream applications, such as information retrieval, text summarization, question answering, and sentiment analysis.
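To make the idea of "identify and classify" concrete, here is a minimal sketch of how token-level predictions are often turned into entities. It assumes the BIO tagging convention (`B-` begins an entity, `I-` continues it, `O` is outside any entity), which the text above does not name but which is common in NER datasets. The sentence, the tag names, and the helper `bio_to_spans` are made up for illustration.

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity_text, entity_type) pairs."""
    spans = []
    current_tokens, current_type = [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new entity, closing any open one.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [token], tag[2:]
        elif tag.startswith("I-") and current_type == tag[2:]:
            # An I- tag of the same type extends the open entity.
            current_tokens.append(token)
        else:
            # "O" closes any open entity; an I- tag that does not match
            # the open entity is treated the same way and dropped.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [], None
    if current_tokens:
        spans.append((" ".join(current_tokens), current_type))
    return spans


# Invented example sentence with hand-written tags.
tokens = ["Jane", "Doe", "joined", "Acme", "Corp", "in", "Paris", "."]
tags = ["B-PER", "I-PER", "O", "B-ORG", "I-ORG", "O", "B-LOC", "O"]
print(bio_to_spans(tokens, tags))
# [('Jane Doe', 'PER'), ('Acme Corp', 'ORG'), ('Paris', 'LOC')]
```

An NER model, whatever its architecture, only has to predict one tag per token; a step like this one recovers the multi-word entities afterwards.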

NER systems range from rule-based approaches to statistical models such as conditional random fields (CRFs) and deep learning models such as Bidirectional Encoder Representations from Transformers (BERT) or other transformer-based architectures. Depending on the approach, they draw on features such as part-of-speech (POS) tags, dependency parses, and word embeddings to identify and classify named entities. NER is used across industries, including finance, healthcare, legal, and e-commerce, to extract structured information from unstructured text.
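As a rough illustration of what "features" means for a classical model such as a CRF, the sketch below builds a feature dictionary for one token from its spelling and its neighbours. The feature names and the function `token_features` are hypothetical choices for this example. POS tags, dependency parses, and embeddings would come from external tools and are left out so the snippet stays self-contained.

```python
def token_features(tokens, i):
    """Return simple hand-crafted features for the token at position i."""
    word = tokens[i]
    return {
        "lower": word.lower(),
        "is_title": word.istitle(),   # capitalised words are often names
        "is_upper": word.isupper(),   # all caps may signal an acronym
        "is_digit": word.isdigit(),   # digits hint at dates or amounts
        "suffix3": word[-3:],
        # Context features: the neighbouring words, with boundary markers.
        "prev_lower": tokens[i - 1].lower() if i > 0 else "<BOS>",
        "next_lower": tokens[i + 1].lower() if i < len(tokens) - 1 else "<EOS>",
    }


sentence = ["Jane", "Doe", "joined", "Acme", "in", "2020"]
for i, word in enumerate(sentence):
    print(word, token_features(sentence, i))
```

A sequence model would receive one such dictionary per token. Transformer models like BERT replace most of this manual feature design with learned contextual representations.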

BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained deep learning model for natural language processing developed by Google. BERT uses a transformer encoder that reads text in both directions, so the representation of each word depends on the words to its left and to its right. This context sensitivity helps on NLP tasks such as text classification, question answering, and named entity recognition.

The transformer architecture used in BERT processes sequential data, such as natural language text, by encoding the entire sequence of input tokens at once rather than one token at a time, which helps it represent long-range dependencies between tokens. BERT is pre-trained on a large corpus of unlabeled text (the original model used English Wikipedia and BooksCorpus) and can then be fine-tuned on a specific downstream NLP task with a much smaller labeled dataset, often reaching strong performance.

One of the main advantages of BERT is its ability to capture the meaning of words in context, which allows it to perform well on tasks that require understanding how the entities in a text relate to their surroundings, such as named entity recognition. BERT-based models have been used in a wide range of applications, such as chatbots, sentiment analysis, and document classification, and BERT remains a common starting point for NLP systems.

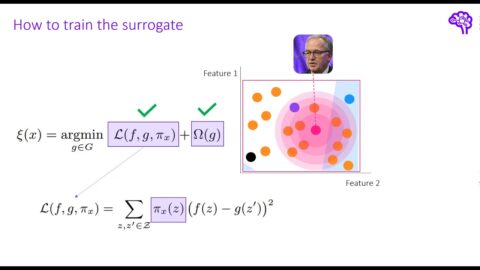

This video shows how to fine-tune a pre-trained BERT model for named entity recognition on a specific dataset, and discusses the benefits and limitations of using BERT for this task.
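One practical detail of fine-tuning BERT for NER is that BERT splits words into subword pieces, while NER labels are usually given per word. The sketch below is not the code from the video. It assumes a toy vocabulary (`VOCAB` is hypothetical; a real BERT vocabulary has roughly 30,000 entries), a simplified greedy longest-match splitter in the style of WordPiece, and one common alignment convention: the first piece of each word keeps the label and the remaining pieces get `-100`, the value PyTorch's cross-entropy loss ignores by default. Special tokens such as `[CLS]` and `[SEP]` are omitted.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's cross-entropy loss

# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"jane", "works", "at", "ac", "##me", "in", "par", "##is", "[UNK]"}


def wordpiece(word, vocab):
    """Greedy longest-match-first split of one word into subword pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a continuation piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no piece matched: the whole word is unknown
        pieces.append(piece)
        start = end
    return pieces


def align_labels(words, labels, label2id, vocab):
    """Tokenize words and expand word-level labels to subword level."""
    tokens, label_ids = [], []
    for word, label in zip(words, labels):
        pieces = wordpiece(word.lower(), vocab)
        tokens.extend(pieces)
        # The first piece carries the word's label; the rest are ignored
        # when the loss is computed.
        label_ids.append(label2id[label])
        label_ids.extend([IGNORE_INDEX] * (len(pieces) - 1))
    return tokens, label_ids


label2id = {"O": 0, "B-PER": 1, "B-ORG": 2, "B-LOC": 3}
words = ["Jane", "works", "at", "Acme", "in", "Paris"]
labels = ["B-PER", "O", "O", "B-ORG", "O", "B-LOC"]

tokens, label_ids = align_labels(words, labels, label2id, VOCAB)
print(tokens)     # ['jane', 'works', 'at', 'ac', '##me', 'in', 'par', '##is']
print(label_ids)  # [1, 0, 0, 2, -100, 0, 3, -100]
```

In a real fine-tuning run the tokenizer that ships with the chosen pre-trained model would do the splitting; the alignment step is the part that usually has to be written by hand.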

🚴🏽 About AI Studio: AI Studio is an e-learning company that provides online consultancy on data science and computer technology for anyone, anywhere. We are a group of experts, PhD students, and young practitioners of artificial intelligence, computer science, machine learning, and statistics. Our initial objective was to help those who want to understand these techniques more easily and get started without too much theory or lengthy reading. We will also publish books on selected topics for a wider audience.
