How is it possible that ChatGPT makes so many incorrect statements and spits out wrong facts? We explain why ChatGPT isn't a truth oracle.

► Sponsor: Arize AI. Sign up for Arize:

Free industry certification in ML observability:

Learn about embedding drift from Arize research:

Check out our daily #MachineLearning Quiz Questions:

📜 ChatGPT blog:

📜 Evaluating the Factual Consistency of Large Language Models Through Summarization:

📜 WebGPT: Improving the Factual Accuracy of Language Models through Web Browsing:

📜 Behavior cloning is miscalibrated:

📺 ChatGPT vs. Sparrow:

📜 Transformers as Algorithms: Generalization and Stability in In-context Learning:

📜 Do Prompt-Based Models Really Understand the Meaning of their Prompts?:

Thanks to our Patrons who support us in Tier 2, 3, 4: 🙏

Dres. Trost GbR, Siltax, Edvard Grødem, Vignesh Valliappan, Mutual Information, Mike Ton

Outline:

00:00 ChatGPT spits out wrong facts

02:15 Arize AI (Sponsor)

03:40 How does ChatGPT / a language model work?

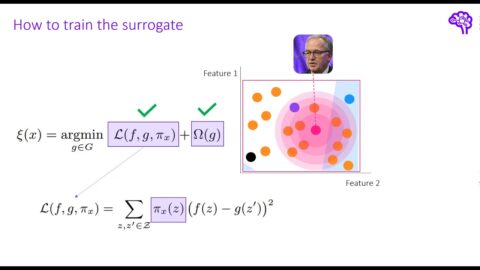

05:53 Why ChatGPT generates nonsense

06:38 Confidence and clarifications

07:21 Limits of behavioral cloning

09:04 Phrasing

09:21 Jailbreaks

09:45 Is ChatGPT even usable?

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔥 Optionally, buy us a coffee to help with our Coffee Bean production! ☕

Patreon:

Ko-fi:

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔗 Links:

AICoffeeBreakQuiz:

Twitter:

Reddit:

YouTube:

#AICoffeeBreak #MsCoffeeBean #MachineLearning #AI #research

Music 🎵 : Intentions – Anno Domini Beats

Video editing: Nils Trost